Today’s lesson started with a brief introduction to AI and a look at a website that Google designed for people working with AI. On this platform, people share their work and experiences with AI.

Both topics covered during the introduction to AI were confusing and complicated for me. The first was mechanical induction: we were shown a set of different shapes and had to figure out which shapes were included and which were excluded. The shapes were mostly different from each other, although some were the same size. Still, I could not work out what made a shape included or excluded. The second was the Perceptron, which looked like a mathematical concept. I had no clue what it was about until a classmate explained it clearly. Basically, an AI model needs to be trained to produce the expected output, and that training can use gestures or any other kind of sample.
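A minimal sketch of the classic perceptron learning rule may make this idea more concrete. It is not the demo from the lesson: the toy data (two numbers per sample, standing in for something like a hand's x and y position) and all function names are hypothetical. The point is that each wrong guess nudges the weights toward the expected output, which is what "training for the expected output" means here.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum of the inputs is above zero, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Classic perceptron rule: after each wrong guess, move the weights
    and bias toward the correct answer; correct guesses change nothing."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training samples: "hand raised" (1) vs "hand lowered" (0).
samples = [(0.1, 0.9), (0.2, 0.8), (0.8, 0.1), (0.9, 0.2)]
labels = [1, 1, 0, 0]

weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])  # prints [1, 1, 0, 0]
```

After training, the model reproduces the labels it was shown. A single perceptron can only separate classes with a straight line, which is why more complex tools combine many such units.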

Lastly, we explored some excellent projects that others have made in the AI field, and I kept thinking about how these projects could be used in interaction design. I am still skeptical about AI and look forward to learning more about it in the coming lectures.

Next Day

Today the lecturer talked about the Wekinator interface, and we tried some examples. The main idea was to explore what Wekinator is capable of. While trying the examples, I struggled to understand which input and output files to select, how they relate to each other, how many inputs and outputs to enter, and how to distinguish between continuous and classifier outputs. Later, I understood that the input and output values are given next to each file name. I tried a few examples, such as colors, FM synthesis, and drum sounds. My understanding of Wekinator was that when I recorded one sample at one point and another sample at a different point, Wekinator filled the gap between the two points automatically. In this way, Wekinator generates output values for positions where I never recorded any sample.
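The "filling the gap" behaviour can be illustrated with simple linear interpolation between two recorded samples. This is only a simplified stand-in: Wekinator trains its own continuous models, and the mouse-position-to-pitch mapping below is a hypothetical example, not one of the course files.

```python
def interpolate(sample_a, sample_b, position):
    """Linearly interpolate an output value between two recorded samples.

    Each sample is (input_position, output_value). This is a simplified
    stand-in for a trained continuous model, not Wekinator's own algorithm.
    """
    (x_a, y_a), (x_b, y_b) = sample_a, sample_b
    t = (position - x_a) / (x_b - x_a)  # 0.0 at sample_a, 1.0 at sample_b
    return y_a + t * (y_b - y_a)


# Hypothetical recordings: mouse x-position -> synth pitch in Hz.
low = (0.0, 200.0)     # mouse on the left, low pitch
high = (100.0, 800.0)  # mouse on the right, high pitch

# Positions 25 and 50 were never recorded, but still get an output value.
for x in (0.0, 25.0, 50.0, 100.0):
    print(x, interpolate(low, high, x))
```

With only two samples the result is a straight line between them; adding more recorded points lets a trained model follow a more complex shape.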

This week’s articles were mainly about Wekinator and how useful the application is, especially for people who work with music. While reading, I learned how Wekinator can act as a medium that detects a performer's very complex gesture movements and converts them into meaningful information that can be used in a more controlled way. This can also help performers take their performances to a higher level. Kontrol is a device with accelerometer sensors that can recognize gestures with high accuracy and provide detailed data, even for complex finger movements. I thought Kontrol could also be very useful for sign language. If we could track sign gestures and translate them into text, Kontrol could help us communicate with people who are unable to speak. I hope I can build this in the future.

Weekly Project

I started by experimenting with all the examples; some worked for me and some did not. Honestly, we had little time for this assignment and only needed to work with sound, so we avoided the examples that did not work. We tried training sounds in Wekinator from different input devices, such as a mouse, a webcam, and a phone. We thought interacting through the phone would be cooler and would also allow mobility while performing.

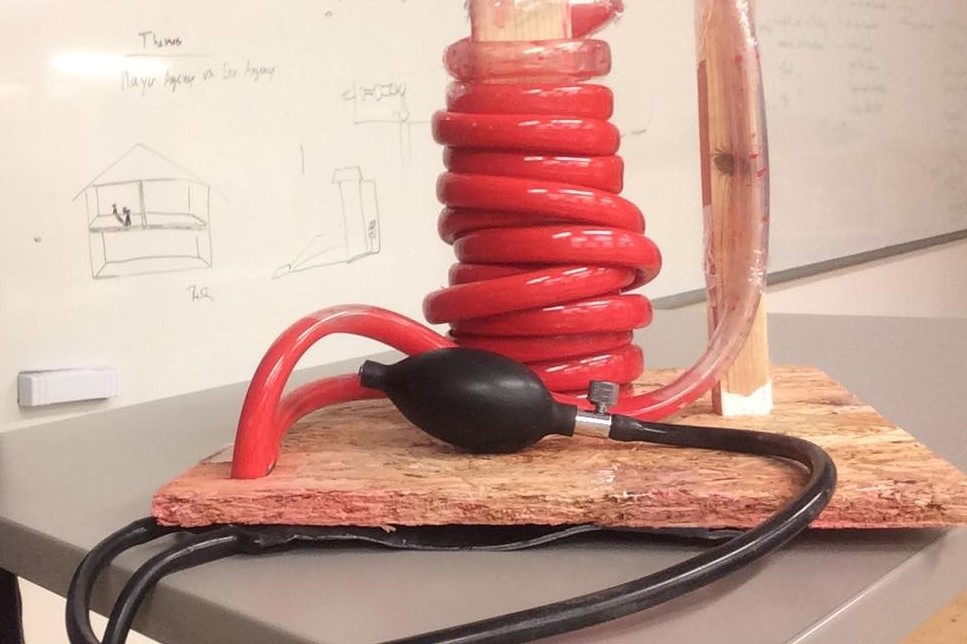

Our concept was to use sound in everyday products such as a hairbrush. We imagined a brush that could identify the type of hair and produce a different sound for each type. After identifying the hair, the brush would then work according to the hairstyle.

MotionSender was the app we used to send the mobile phone's sensor values to Wekinator. After a few experiments, I realized that Wekinator was not accurately recognizing the gesture samples we had recorded: the output kept mixing with the other gesture samples. Later, when we switched to the classifier type, the outputs were more accurate and acceptable for us.
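One way to see why the classifier behaved better is that a classifier always answers with exactly one of the recorded classes, while a continuous model can return a blend between them. Below is a minimal nearest-neighbour classifier, one common kind of classifier, written in plain Python. The accelerometer readings and class numbers are hypothetical, and this is a sketch of the idea rather than Wekinator's internal implementation.

```python
import math


def nearest_neighbour(training, reading):
    """Return the class label of the recorded sample closest to `reading`."""
    best_label, best_distance = None, math.inf
    for features, label in training:
        distance = math.dist(features, reading)  # Euclidean distance
        if distance < best_distance:
            best_label, best_distance = label, distance
    return best_label


# Hypothetical accelerometer samples (x, y, z) recorded for two gestures.
training = [
    ((0.0, 0.0, 9.8), 1),  # phone lying flat -> class 1
    ((0.1, 0.2, 9.7), 1),
    ((9.8, 0.0, 0.0), 2),  # phone tilted on its side -> class 2
    ((9.6, 0.3, 0.2), 2),
]

# An in-between reading still maps to exactly one class,
# instead of a blended value somewhere between the two gestures.
print(nearest_neighbour(training, (4.0, 0.1, 5.0)))  # prints 1
```

`math.dist` requires Python 3.8 or later.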

We tried to send input from multiple devices to Wekinator: besides interacting with the mobile device, we wanted to interact through the webcam at the same time by increasing the number of inputs, but this did not work. We also tried opening two Wekinator instances on one laptop and changing the listening port number in both Wekinator and the Processing file, but that did not work for us either. I wish we had had more time to come up with other cool things using Wekinator.
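One possible approach to the multiple-device problem, which we did not test, is to merge the values from both devices into a single OSC message, so that one Wekinator project receives all inputs at once. The sketch below builds an OSC message by hand with Python's standard library. It assumes Wekinator is listening on its default input port 6448 for the `/wek/inputs` address (check the port shown in the Wekinator window), and that the project's input count equals the total number of values sent. The function names and the example values are hypothetical.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII bytes, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, floats):
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)
    args = b"".join(struct.pack(">f", value) for value in floats)
    return osc_string(address) + osc_string(type_tags) + args


def send_combined_inputs(phone_values, webcam_values, host="127.0.0.1", port=6448):
    """Send phone and webcam features together as ONE /wek/inputs message.

    Assumes Wekinator listens on `port` (6448 by default) and that its
    input count equals len(phone_values) + len(webcam_values).
    """
    packet = osc_message("/wek/inputs", list(phone_values) + list(webcam_values))
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

For example, calling `send_combined_inputs([0.1, 0.2, 9.7], [0.5, 0.25])` would send five inputs (three accelerometer axes and two hypothetical webcam values) in one message, so the Wekinator project would need to be set up with five inputs.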

Presentation Day!

Before the presentations, the lecturer asked us to briefly explain our project. Controlling sound from an external device was not easy, so almost none of our classmates had explored this area. Personally, I find it awkward when several groups show similar projects on the same day, as some did, but our approach could at least show others that sound can be steered through Wekinator even with a phone's sensors. Of my classmates' projects, I found the Leap Motion one the most interesting. The Leap Motion collects data from hand and finger movements. Because the device is not worn on the body, you can move your hands freely left to right or up and down. Controlling sound with the Leap Motion through Wekinator felt like magic for a moment, and it was the most effortless way to play with sound.

During the presentation, we explained our concept of using sound effects in everyday tools such as a hairbrush, and we talked about the challenges we encountered while trying to take the project further. We received positive feedback from the lecturer, who added that including sound in tools such as a hairbrush is a unique idea that could be fun and playful, since sound could motivate users to engage with such artifacts for longer. I had never considered the playful aspect before, but it makes sense: most children try to avoid brushing their hair and teeth because they find it annoying. Sound effects in such items could encourage children to do it regularly.

This week, I realized that AI has the potential to improve the interactivity of almost any kind of product and to make human life more comfortable. In the end, I decided to choose AI as my elective course next term and explore the field more deeply.